Escalating concerns in AI for 2023 – and what to do about them

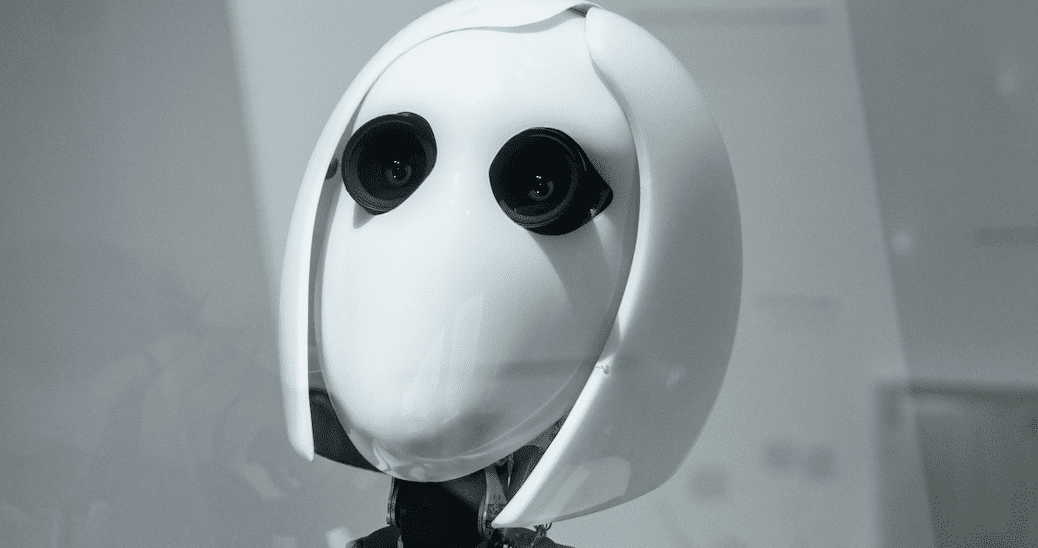

When people think of artificial intelligence, what often comes to mind is a cadre of robots uniting as sentient beings to overthrow their masters. In reality, AI has already woven its way into consumers’ daily lives, arriving in the form of recommendation engines for online shopping, suggested answers to customer service questions drawn from a knowledge base, and suggestions on how to fix grammar when writing an email.

According to McKinsey’s “The State of AI in 2021” report, 57 per cent of companies in emerging economies had adopted some form of AI, up from 45 per cent in 2020. In 2022, an IBM survey found that, although AI adoption is gradual, four out of five companies plan to use the technology in the near future.

Industry is expected to embrace AI further as software continues to evolve. Users will see the technology provide contextual understanding of written and spoken language, help them reach decisions faster and more accurately, and tell the bigger-picture story behind disparate data points in more useful and applicable ways.

AI is going to move the needle for enterprises in 2023, but not without mounting concerns. Privacy, or the lack thereof, will likely remain a central fear among consumers. Current AI training processes are also prone to bias, whether from misunderstanding spoken language or from skewed data points. Meanwhile, neither the media nor international governance has caught up with where AI sits today and where it is headed.

AI FULLY ENTERS THE MAINSTREAM

Mainstream adoption of AI is on the way, with devices, apps and employee experience (EX) platforms likely to come equipped with AI out of the box. Consumers will no longer be asked to opt in, which will accelerate and heighten concerns that already exist in the mainstream. Privacy tops the list given the volume of public data breaches in recent years, including those at LinkedIn, MailChimp and Twitch, and consumers are understandably wary of giving out personal information.

One of the central issues in privacy is that there is no industry-wide consensus on what best practices look like, and it’s tough to gather data responsibly when the very concept of ethical collection is fluid. AI technology is still in its infancy, and governance has not yet matured to the point where there is consistency across companies.

To prepare for the inevitability of AI-first technology, companies could demonstrate full transparency by providing easy access to their privacy policies or, if none exist, by writing them as soon as possible and making them readily available on the company’s website.

Granular language, while generally frowned upon when communicating with a non-tech-savvy audience, is welcome in this instance: consumers who fully understand how their data gets used are more likely to share at least some of it.

BIASES IN AI MUST BE ELIMINATED

Biases are often invisible, even when their effects are pronounced, which makes their elimination difficult to guarantee. And despite its advanced state, AI currently remains just as prone to bias as its human counterparts.

Biases are usually introduced early in the process. AI needs to be trained, and many companies either purchase synthetic data from third-party vendors, which is prone to its own distinct biases, or have the AI comb the general internet for contextual clues. However, no one is regulating or monitoring the web for bias, so biases are likely to creep into an AI platform’s foundation. Investment in AI isn’t likely to slow anytime soon, so it’s particularly important to establish processes and best practices that scrub out as many biases, known or unknown, as quickly as possible.

One of the most effective safeguards against bias is to place human reviewers between the data collection and processing phases of AI training. At Zoho, for example, some employees join the AI in combing through publicly available data, first scrubbing any trace of personally identifiable information, not only to protect those individuals but also to ensure that only crucial information makes it through. The data is then distilled further to include only what’s relevant. It’s important to remember that bias is an evolving concept and a moving target, particularly as access to data improves. That’s why companies must routinely scan for new information and update their criteria for bias accordingly.

THE MEDIA NARRATIVE REMAINS RELENTLESS

At the center of these two issues sits the media, who tend to repeat and reemphasize two conflicting narratives. On the one hand, they report that AI is a marvelous piece of technology with the potential to revolutionize our daily lives in both obvious and unseen ways. On the other, they continue to insinuate that AI is one step away from taking people’s jobs and declaring itself supreme overlord of Earth. As AI becomes more ubiquitous, expect the media’s approach to remain mostly the same. It’s reasonable to anticipate a slight increase in stories about data breaches, though, as wider access to AI raises the chance that any given consumer will be affected. This trend could deepen a catch-22: AI cannot truly improve without increased adoption, yet adoption is likely to stagnate while improvements to the technology lag.

CUSTOMERS GUIDE THE FUTURE OF AI

Best of all, a strong customer base in 2023 opens lines of communication between vendor and client. Direct, detailed feedback drives relevant, comprehensive updates to AI. Together, companies and their customers can forge an AI-driven future that pushes the technology envelope while keeping data collection safe, secure and unbiased. Just don’t tell the robots.